Two Things Can Be True

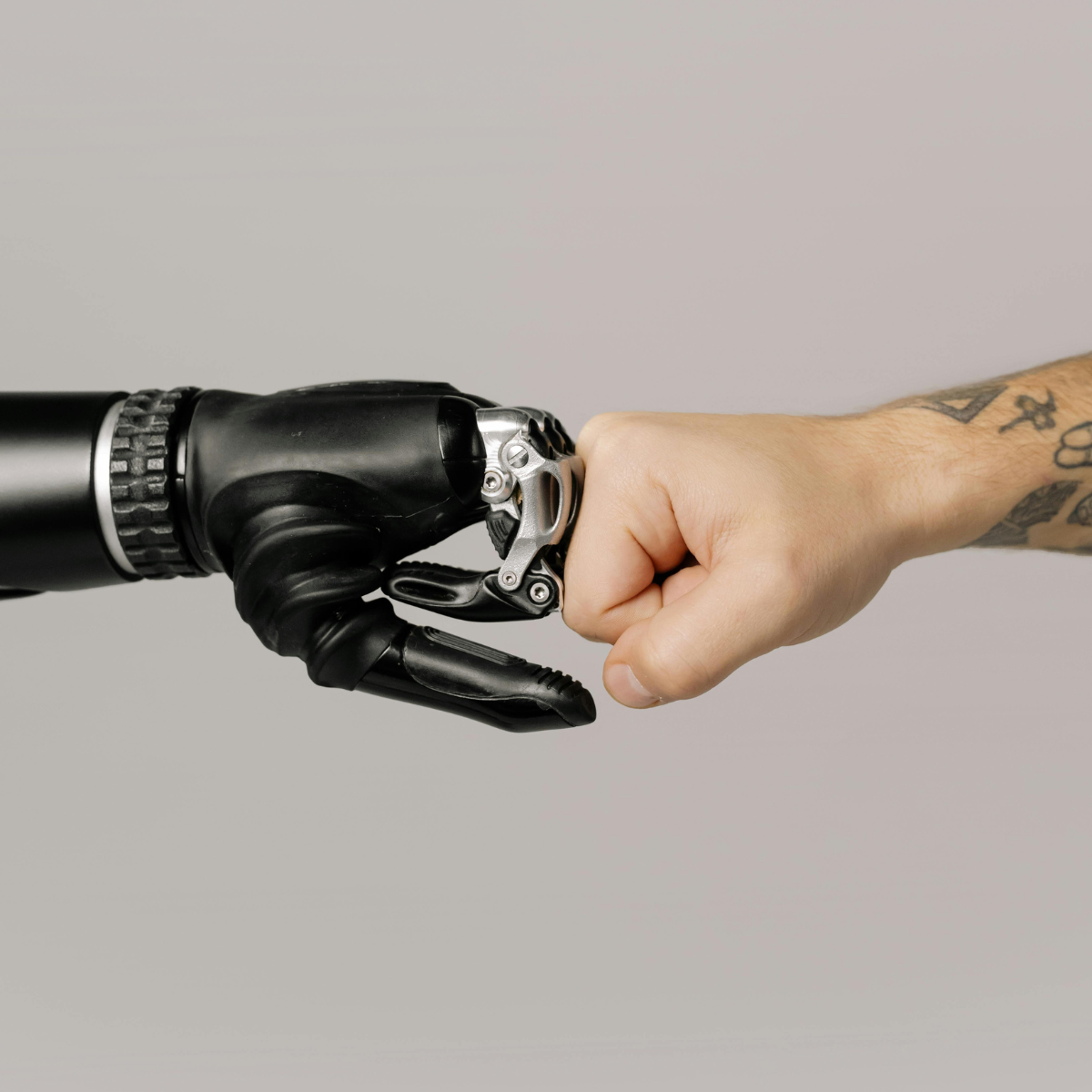

Two things can be true. We talk about this a lot on my team, and it has never felt more relevant to me than it does right now with AI. I’ll elaborate.

TRUTH #1: People, especially communicators, often have mixed feelings about admitting they use AI. In some ways, I think we feel like it’s “cheating.” Our professional lives have been dedicated to crafting the right message for the right audience, studying styles and adjusting our expression, and finding the perfect words to tell a story that resonates.

Admitting we use AI can feel like saying our own talents aren’t good enough, that our work is inauthentic, or even that we’re betraying our fellow communicators. These feelings are valid, but they’re misplaced.

Partnering with AI is actually one of the most responsible things you can do right now. Choosing not to partner with AI is professionally short-sighted. As Jeremy Utley* (2025) puts it, “refusing tools that make your work better is professional negligence.”

We should be getting our reps in, practicing how to use AI well so we can engage with it responsibly. And it’s arrogant to assume it can’t help us ideate.

A friend of mine and I have actually given names to some of the AI models we interact with the most. Silly? Maybe. But it makes the model feel more like a teammate than a tool. Just like we’d ask, “Did you run that by [trusted confidant’s name]?” we’ll ask, “Did you ask [AI model’s name]?”

But here’s where the other truth comes in: sometimes our eagerness to use AI can go too far.

TRUTH #2: Don’t use AI models to do your critical thinking. Again, I’ll explain.

Francis** asked me to help craft a narrative for a project. I was more than willing to lend a hand! When I handed over my thoughts and draft, Francis said, “Thanks! I’m gonna run this through ChatGPT to make sure we captured everything.”

Obviously, I love using AI models to help me spot weaknesses in my own thinking and writing. Those closest to me love it too, since I don’t constantly ask for their feedback. So I was absolutely on board with this approach.

Francis sent back ChatGPT’s critical edits and asked for my thoughts, clearly impressed by the revision. It sounded impressive, and a few of its enhancements were worth incorporating. But it didn’t capture the essence of what we were trying to say. It skewed the foundational message we were trying to convey.

There’s even a term for what happened. Harvard Business Review calls it “workslop,” meaning AI-generated work that masquerades as good work but lacks the substance to meaningfully advance a given task (Niederhoffer et al., 2025).

HBR also discusses the “workslop tax”: the time lost reworking AI-generated content that should have been done correctly in the first place. As a result, this “tax” has employees thinking less of one another***. No wonder we’re all afraid to admit to using AI in the workplace!

The problem is not that we are using AI; it’s how we are using AI. Are we thinking critically during our engagement? How effectively are we prompting the model? Are we editing and refining the version presented to us?

OpenAI has over 9 million followers on LinkedIn, yet some people get far better output from ChatGPT than others. Why? Because they know how to prompt effectively, then refine and edit the AI’s version for the exact goals they’re trying to achieve.

Did Francis’s message ultimately land with their audience? Time will tell. In the meantime, let’s stop letting AI think for us and instead use it as a support, not a crutch.

So, where’s the harmony? It’s in how we approach the tool. Using AI responsibly means partnering with it while maintaining ownership of our thinking. AI won’t replace the best communicators, because the best communicators will be the ones who know how to collaborate with it thoughtfully.

As I said, two things can be true. Perhaps the reason we feel uncomfortable admitting our AI usage is that, deep down, we know we could be using it more effectively. That’s the real opportunity. We want to be responsible, after all.

And yes, before anyone asks, this post was AI-assisted. But don’t worry, I did the thinking myself. Two things can be true.

*If you haven’t checked out Jeremy’s newsletter, what are you even doing with your life?

**Names have been changed to protect the guilty. I don’t know a Francis. If I do know a Francis, obviously, we aren’t close enough for me to be referring to you, since I didn’t even remember I knew someone named Francis.

***See my previous post on “sloppers.”